About

As ecological data and the threats facing ecosystems grow in size and complexity, ecologists increasingly turn to artificial intelligence (AI) for data processing, inference, and decision-making. However, this acceleration and opportunity come with significant scientific, ethical, and practical considerations. We formed this community to discuss the practical barriers to implementing AI in ecological studies, specifically ecologists’ hesitation to use AI because of unclear relevance, opportunity costs, implementation burden, a transparency deficit, and a shortage of incentives. During the workshop, we developed practical recommendations for AI tool development and saw firsthand how educational resources, communities of practice, visualization, cyberinfrastructure, and science communication can help overcome these practical barriers.

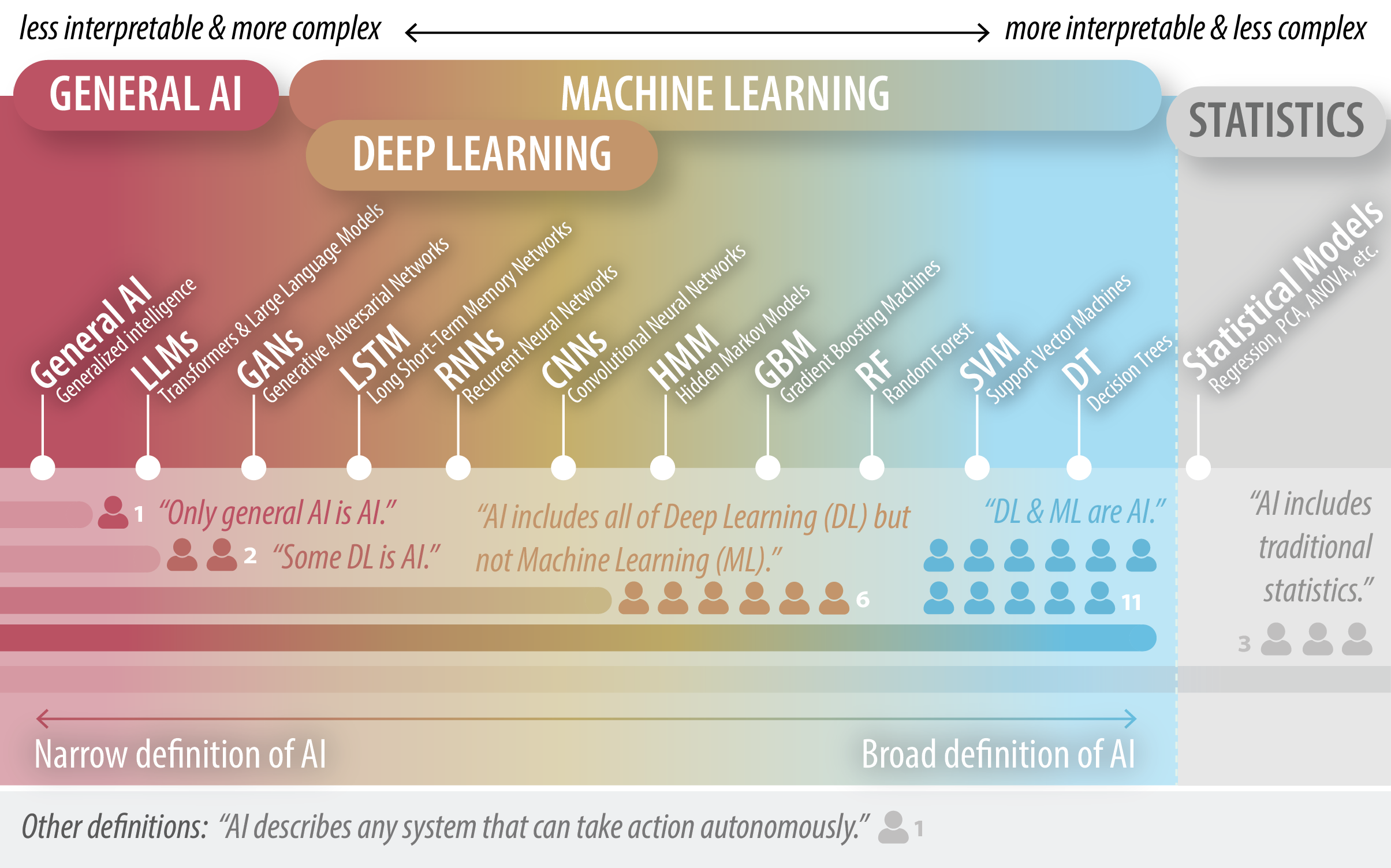

What is AI, anyway?

The diverse backgrounds, expertise, and perspectives of our group were reflected in our differing definitions of the terms central to this paper, starting with the term AI (Fig 1). In ecology, and in science more broadly, AI, machine learning, and data science are now often used interchangeably. Strict definitions of AI may exclude machine learning and even deep learning, or may be limited to systems that appear to replicate human behavior or, at minimum, make flexible predictions in response to sensory information. We define AI broadly to include the spectrum of machine learning and deep learning models available to ecologists (Fig 1). As in any subfield, ecologists should choose the methods that best fit their scientific question rather than selecting for novelty or complexity.